Proud to be among the authors of the top 3 papers for 2020

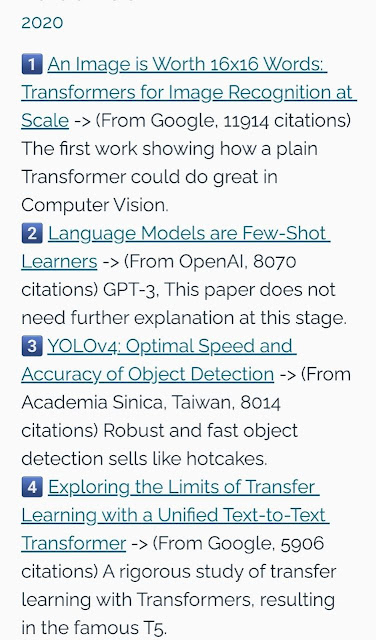

Proud to be among the authors of the top 3 papers for 2020: 1. ViT (Google) - Cited by 11914 papers / 12 authors. 2. GPT-3 (OpenAI) - Cited by 8070 papers / 31 authors. 3. YOLOv4 (Academia Sinica) - Cited by 8014 papers / 3 authors: Alexey Bochkovskiy, Chien-Yao Wang, Hong-Yuan Mark Liao ( google scholar ) From some discussion on Twitter: https://twitter.com/ylecun/status/1631793362767577088 Ranking based on Google scholar: https://www.zeta-alpha.com/post/must-read-the-100-most-cited-ai-papers-in-2022 -the-100-most-cited-ai-papers-in-2022